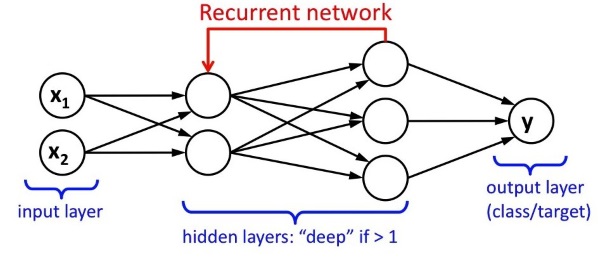

Deep Learning, as we know it today, is a set of algorithms loosely based on the workings of the human brain. Most notably, we think of Neural Networks when we hear the terms Deep Learning or Machine Learning. Although the theory behind it was introduced in the early 1940s, the first functional Neural Network, called “The Perceptron”, was constructed in 1957; it was a two-layer network used for pattern recognition. Neural Networks are in essence networks of nodes (called artificial neurons) split into different types of layers: an input layer, an output layer, and any number of hidden layers for processing. These layers can either process data sequentially, never communicating back to the previous layer, or send feedback to earlier layers to correct the previous steps and repeat.

Theoretical Neural Network
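As a rough illustration of the layered structure described above, here is a minimal sketch of the sequential (feedforward) case in plain Python. The weights, biases, layer sizes and the sigmoid activation are made-up assumptions for the example; they do not come from any real trained network.

```python
import math


def sigmoid(x):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def layer_forward(inputs, weights, biases):
    """Compute the outputs of one layer.

    Each row of `weights` belongs to one artificial neuron and holds one
    weight per input value; `biases` holds one bias per neuron.
    """
    return [
        sigmoid(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


def feed_forward(inputs, layers):
    """Pass the inputs through every layer in order, never looking back."""
    activations = inputs
    for weights, biases in layers:
        activations = layer_forward(activations, weights, biases)
    return activations


# Hypothetical network: 2 inputs -> 3 hidden neurons -> 1 output neuron.
network = [
    ([[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]], [0.0, 0.1, -0.1]),  # hidden layer
    ([[0.7, -0.5, 0.2]], [0.05]),                                # output layer
]

output = feed_forward([1.0, 0.0], network)
```

The feedback variant mentioned above would additionally compare `output` with a desired result and adjust the weights, which this sketch leaves out.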

Chess Engines are based more on the algorithms written out by Claude Shannon in his seminal work on the topic, called “Programming a Computer for Playing Chess”. He introduced two types of algorithms: type A, or brute-force algorithms, which calculate every possible move and evaluate each one for effectiveness, and type B, or intelligent search algorithms, where certain possible moves are discarded from the evaluation branches because they are judged to be bad, in order to reduce the calculation effort needed. Both types rely on the minimax procedure described in the paper to pick the best line from the positions that are evaluated. Type B is closer to how a human would calculate good chess moves. In more recent years, however, with all the improvements in computing power in place, the strategy of modern-day chess engines tends to strongly favor the type A approach.
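To make the difference between the two types a bit more concrete, here is a small sketch on a toy game tree rather than on real chess. The `Node` class, the `width` parameter and all the numbers are hypothetical and only serve the illustration; a real engine would generate positions and evaluations from the board. With `width=None` every move is examined (type A); with a `width`, only the moves that look most promising according to a cheap estimate are examined (type B).

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    # Cheap static evaluation of the position, from the maximizing player's view.
    estimate: float
    # Positions reachable in one move; an empty list means a leaf.
    children: list = field(default_factory=list)


def search(node, maximizing, width=None):
    """Minimax over the tree; returns (score, positions_visited).

    width=None  -> type A: look at every move.
    width=k     -> type B: keep only the k moves that look best for the
                   side to move, judged by the cheap static estimate.
    """
    if not node.children:
        return node.estimate, 1

    candidates = node.children
    if width is not None:
        # Best-looking moves first: highest estimate for the maximizer,
        # lowest estimate for the minimizer.
        candidates = sorted(
            candidates, key=lambda c: c.estimate, reverse=maximizing
        )[:width]

    results = [search(child, not maximizing, width) for child in candidates]
    scores = [score for score, _ in results]
    visited = 1 + sum(count for _, count in results)
    best = max(scores) if maximizing else min(scores)
    return best, visited


def leaves(*values):
    """Helper to build leaf positions from their evaluations."""
    return [Node(v) for v in values]


# Toy tree: three candidate moves, each with three possible replies.
root = Node(0, [
    Node(3, leaves(3, 12, 8)),
    Node(5, leaves(2, 4, 6)),
    Node(-2, leaves(14, 5, 2)),
])

full_score, full_visited = search(root, maximizing=True)
selective_score, selective_visited = search(root, maximizing=True, width=2)
```

On this toy tree both searches settle on the same score, while the selective one visits roughly half as many positions. The catch is that a misleading estimate can make a type B search throw away the move that was actually best, which is one reason raw computing power ended up favoring type A.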

The book is mostly about the experiences of the former world champion when battling an opponent not plagued by the psychological aspects of the game, and is thus not that relevant from an AI point of view. There are, however, some key concepts that he mentions in the book which I found to have a bearing on my understanding of AI and RPA. These reflections on his own experiences are by no means uninteresting, but I’d rather state this so the reader knows what to expect when picking up the book.

The first interesting concept I came across in the pages is Moravec’s Paradox: the discovery by artificial intelligence and robotics researchers that, contrary to traditional assumptions, high-level reasoning requires very little computation, but low-level sensorimotor skills require enormous computational resources (definition taken from Wikipedia). Garry Kasparov correctly noted that this applies to chess engines as well: they have an easier time with tactical calculations than they do with physically moving the pieces. What computers are strong in, humans are weak in, and vice versa. When we look at, for example, the maneuverability of drones, RPA might strive to alter this, but we are not quite there yet.

Another thing computers are bad at is determining context. In essence, each piece of new data necessitates an entire recalculation of the circumstances. IBM’s Watson has the capability to understand natural language, but we can all safely say that this technology still has some way to go. Just ask Google Translate to translate an entire phrase. In the world of Natural Language Processing (NLP), there are three areas that play a role, and the idea of “context” sits with the latter two. These are evidently also the most difficult for the computer to analyze:

- Syntax: What part of a given text is grammatically correct?

- Semantics: What is the meaning of a given text?

- Pragmatics: What is the purpose of the text?

These types of systems need a lot of information to determine any sort of proper context. Although Douglas Hofstadter didn’t accurately predict the loss of humans in chess in his book “Gödel, Escher, Bach: An Eternal Golden Braid”, he did indicate that this kind of human cognition is a hard skill for IT systems to master.

When it comes to improving on the design of Deep Blue, the book states that after the second round of games, where it beat the world champion, the opportunity cost to improve the chess computer was just too high. It had served its purpose as a marketing stunt for IBM, and was decommissioned almost immediately after. Even with later chess engines, focusing on optimization for too long causes stagnation (as is the case with, for example, business processes or value chains). At some point, you need to build a new vision from the ground up to get better results. Also, demand can only be stimulated by product diversification for a limited time, a realization that Max Levchin (former CTO of PayPal) made about Silicon Valley and tech startups as well (as indicated in the book).

The book does advocate one idea for the future, though. As Garry Kasparov talks about his experiences with chess games where man+machine is the theme, it is clear that man+machine still beats chess engines without human collaboration. Drawing this parallel to other fields, it is also clear to me that we are moving to a world where AI-assisted work is going to be prevalent, and that it will enable us to augment our productivity as a society.

| Review | Artificial Intelligence |