Verifying your Service Design and Implementation

28th of December 2018

The main purpose of Service Oriented Architecture (SOA) has always been to move from monolithic, siloed, and often archaic/legacy systems and applications to a highly distributed and loosely coupled design of smaller components/services. In this design we accept increased difficulty in management and monitoring in exchange for the ability to scale, extend, and deploy functionality independently. This was the underlying driver for introducing classic SOA, and it remains the underlying driver when an organization goes full Micro Services.

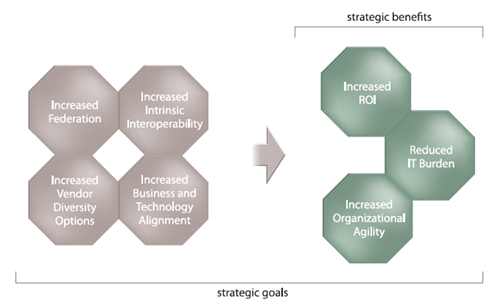

To delve deeper into these drivers for SOA, I paraphrase Thomas Erl’s concepts for classic SOA, which are spread across his many books on the topic. Service-orientation emerged as a design approach in support of achieving the following goals and benefits associated with SOA and service-oriented computing:

- Increased Intrinsic Interoperability: Services within a given boundary are designed to be naturally compatible so that they can be effectively assembled and reconfigured in response to changing business requirements.

- Increased Federation: Services establish a uniform contract layer that hides underlying disparity, allowing them to be individually governed and evolved.

- Increased Vendor Diversification Options: A service-oriented environment is based on a vendor-neutral architectural model, allowing the organization to evolve the architecture in tandem with the business without being limited to proprietary vendor platform characteristics.

- Increased Business and Technology Domain Alignment: Some services are designed with a business-centric functional context, allowing them to mirror and evolve with the business of the organization.

- Increased ROI: Most services are delivered and viewed as IT assets that are expected to provide repeated value over time that surpasses the cost of delivery and ownership.

- Increased Organizational Agility: New and changing business requirements can be fulfilled more rapidly by establishing an environment in which solutions can be assembled or augmented with reduced effort by leveraging the reusability and native interoperability of existing services.

- Reduced IT Burden: The enterprise as a whole is streamlined as a result of the previously described goals and benefits, allowing IT itself to better support the organization by providing more value with less cost and less overall burden.

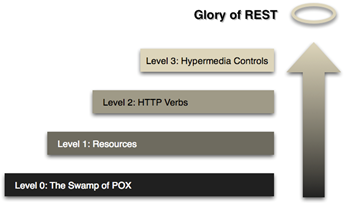

When designing architectures and contracts for your services, be sure to check out the O’Reilly book REST in Practice by Jim Webber, Savas Parastatidis, and Ian Robinson. Its chapters progressively explain how to design and implement your services according to the REST principles captured in Leonard Richardson’s REST maturity model, so that together they form a coherent distributed system that scales to fit its purpose and is robust to changes in its application and service landscape.

Richardson’s Restful Maturity Model

To improve the robustness of REST services, a cue was taken from Jon Postel’s playbook. Postel’s Law was part of the early specification of the Transmission Control Protocol (TCP), in which he stated: “Be conservative in what you do, be liberal in what you accept from others”. The movement that introduced Micro Services as a viable alternative to classic SOA co-opted this statement by rewording it as: "Be conservative in what you send, be liberal in what you accept". This became the basis for the Tolerant Reader pattern, which works both ways. When a client retrieves data from a service, it might get more data than it bargained for and needs to be able to ignore the excess. When a client posts data to a service, the onus is on that service to do the same, as the payload may contain data the service is not interested in. This is common when several services process a payload that is published on a topic they are all subscribed to.
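The Tolerant Reader idea can be sketched in a few lines of Python. This is a minimal illustration under assumptions, not a prescribed implementation: the `Customer` type and the `id`/`name` field names are hypothetical, and the payload is assumed to be JSON. The reader extracts only the fields it needs and ignores the rest, while the writer stays conservative by emitting only the documented fields.

```python
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class Customer:
    # Only the fields this consumer actually uses (hypothetical names).
    customer_id: str
    name: str


def read_customer(payload: str) -> Customer:
    """Liberal in what we accept: pick the fields we need, ignore everything else."""
    data = json.loads(payload)
    return Customer(customer_id=str(data["id"]), name=data["name"])


def write_customer(customer: Customer) -> str:
    """Conservative in what we send: emit exactly the documented fields."""
    return json.dumps({"id": customer.customer_id, "name": customer.name})


# The provider has since added "loyaltyTier" and "address"; this reader is unaffected.
payload = '{"id": 42, "name": "Ada", "loyaltyTier": "gold", "address": {"city": "Ghent"}}'
print(read_customer(payload))  # Customer(customer_id='42', name='Ada')
```

Because the reader never depends on the full shape of the payload, a provider adding fields is not a breaking change for this consumer; removing or renaming `name` still would be.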

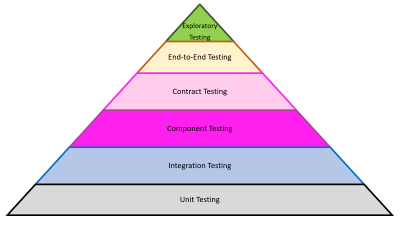

An integral part of designing complex systems, such as a Micro Services stack across an organization, is implementing proper testing. The testing strategy for the functionality of software has traditionally been represented as a pyramid: the tests closest to the lines of code (unit tests) are the most numerous, and the more coarse-grained the tests become, the fewer there are. This is mainly because tests become more brittle the higher up the abstraction chain you go. Besides being brittle and needing rework with each iteration of the code, higher-level tests are usually also much more expensive to execute. The testing pyramid appears in many sources (white papers, blog posts…) and tends to contain comparable test types, with slight variations depending on the expert drawing it. An example test pyramid can be seen in the diagram below.

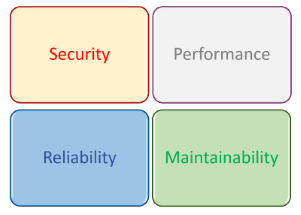

Aside from testing functionality, we also want to verify whether the non-functional requirements for the software have been met. There is no clear hierarchy among the different types of non-functional requirements (the quality characteristics described in the ISO 25010 standard), so instead of a pyramid we employ a testing quadrant. A hierarchy can still be derived for a given system if the relative importance of these requirements is expressed through Service Level Agreements (SLAs) or similar indicators. For most of these test types we can imagine their typical form: penetration testing for security, load/stress/capacity testing for performance, and regression testing for maintainability.
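To make the link between an SLA and a performance test concrete, here is a small Python sketch that turns a latency target into a pass/fail check. The 95th percentile and the 50 ms threshold are assumed, hypothetical SLA values, and a real load or stress test would drive the deployed service with a dedicated tool rather than time a local function; the sketch only shows how an SLA figure becomes an assertion.

```python
import math
import time
from typing import Callable, List


def measure_latencies(call: Callable[[], object], runs: int) -> List[float]:
    """Invoke `call` repeatedly and record each wall-clock latency in milliseconds."""
    latencies = []
    for _ in range(runs):
        start = time.perf_counter()
        call()
        latencies.append((time.perf_counter() - start) * 1000.0)
    return latencies


def percentile(samples: List[float], pct: float) -> float:
    """Nearest-rank percentile, with pct in the range (0, 100]."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = math.ceil(pct / 100.0 * len(ordered))
    return ordered[max(rank, 1) - 1]


def meets_sla(latencies_ms: List[float], pct: float, threshold_ms: float) -> bool:
    """True when the given percentile of the latencies stays within the threshold."""
    return percentile(latencies_ms, pct) <= threshold_ms


# Hypothetical SLA: 95% of calls must complete within 50 ms.
samples = measure_latencies(lambda: sum(range(10_000)), runs=200)
print(meets_sla(samples, pct=95, threshold_ms=50.0))
```

Using a percentile rather than an average keeps a few slow outliers visible, which is usually how latency targets in SLAs are phrased.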

New test types have emerged from the ability to deploy components/services faster and independently of each other. Reliability concerns around business continuity and fault tolerance can be addressed by newer types of testing such as A/B testing, canary testing and chaos testing. These test types also shift the testing paradigm: in most instances they are executed in production rather than in a designated test environment. More on these types of testing in later thoughts.
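Canary testing is typically handled by a load balancer or service mesh, but the routing decision behind it is easy to sketch. The Python below is an illustration under assumptions: users are identified by a string id, the canary receives a configurable percentage of traffic, and the backend names `stable` and `canary` are hypothetical. Hashing the id, rather than picking randomly per request, keeps each user on the same version for the duration of the experiment.

```python
import hashlib


def routes_to_canary(user_id: str, canary_percent: int) -> bool:
    """Deterministically place a user in the canary group based on a hash of their id."""
    if not 0 <= canary_percent <= 100:
        raise ValueError("canary_percent must be between 0 and 100")
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100  # stable bucket in 0..99
    return bucket < canary_percent


def pick_backend(user_id: str, canary_percent: int) -> str:
    """Return the (hypothetical) backend name that should serve this user."""
    return "canary" if routes_to_canary(user_id, canary_percent) else "stable"


# Roughly 5% of users should land on the canary; the same user always gets the same answer.
users = [f"user-{n}" for n in range(10_000)]
canary_users = sum(1 for u in users if pick_backend(u, 5) == "canary")
print(canary_users)
```

Raising `canary_percent` step by step widens the rollout, and setting it back to 0 returns all traffic to the stable version.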

One of the relatively new types of testing is consumer-driven testing, which can be considered a form of contract testing. This type of testing feels a bit unusual, as we tend to strictly guard our project code (including the automated tests written for the project) within the confines of the team working on the project. Other teams wanting to “borrow” code for instructional or reuse purposes are mostly given read-only access, so allowing the teams that are going to consume your services to write tests and add them to your test batches asks for a switch in philosophy from more established teams. These tests guard our stakeholders' consumer services against breaking changes when those changes are deployed into the production environment. If breaking changes are indeed needed and cannot be mitigated through versioning strategies or other means (such as the aforementioned Tolerant Reader pattern), at least our consumers will be alerted upfront, during the testing phases of the project. These tests serve as an insulation layer between the consumers of your services and your deployments. When using a contract-first design strategy, these tests might even become tools in a Test-Driven Development (TDD) approach.

Consumer-driven contracts are a pattern used to address the evolutionary changes of the services being developed. As your service implementations continue to change, these contracts form the basis for business continuity through regression testing of your new versions. They give the provider of a service insight into the requirements its consumers impose on it, allowing the provider to make changes without constantly worrying about affecting those consumers, and they indicate when a breaking change will necessitate more elaborate planning and conversation.
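A minimal, framework-free sketch of the idea in Python: each consuming team contributes a contract listing the fields it reads and their expected types, and the provider runs every contract against a candidate response as part of its own test batch. The team names, field names and contract format are hypothetical; dedicated tools such as Pact exist for this purpose, and this sketch does not model their APIs. Extra fields never cause a failure, which keeps the contracts in line with the Tolerant Reader pattern.

```python
from typing import Any, Dict, List

# Each consuming team contributes a contract: the fields it reads and their types.
# Team names and fields are hypothetical examples.
CONSUMER_CONTRACTS: Dict[str, Dict[str, type]] = {
    "billing-service": {"id": str, "total": float},
    "shipping-service": {"id": str, "address": dict},
}


def violations(response: Dict[str, Any], contract: Dict[str, type]) -> List[str]:
    """List every way `response` fails one consumer's contract (extra fields are fine)."""
    problems = []
    for field, expected_type in contract.items():
        if field not in response:
            problems.append(f"missing field '{field}'")
        elif not isinstance(response[field], expected_type):
            problems.append(
                f"field '{field}' is {type(response[field]).__name__}, "
                f"expected {expected_type.__name__}"
            )
    return problems


def verify_consumers(response: Dict[str, Any],
                     contracts: Dict[str, Dict[str, type]]) -> Dict[str, List[str]]:
    """Run all consumer contracts; return only the consumers that would break."""
    report = {}
    for consumer, contract in contracts.items():
        problems = violations(response, contract)
        if problems:
            report[consumer] = problems
    return report


# A candidate provider version that renamed "address" to "shippingAddress".
candidate = {"id": "A-1", "total": 19.99, "shippingAddress": {"city": "Ghent"}}
print(verify_consumers(candidate, CONSUMER_CONTRACTS))
# {'shipping-service': ["missing field 'address'"]}
```

The report names exactly which consumer would break and why, which is the starting point for the planning and conversation a breaking change calls for.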

Just to clarify: contract testing concerns itself not only with the interface (the combination of operations and the data structures of their input/output parameters), but also with secondary traits such as Quality of Service attributes and service policies (for example security and transaction information). These secondary traits were more explicit in SOAP services (implemented through the various WS-* specifications), but can be implemented equally well in REST services through the use of headers.
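Extending the same idea to secondary traits, a consumer's contract can also list the response headers it depends on. In this Python sketch the specific headers are assumptions chosen for illustration: `Cache-Control` stands in for a Quality of Service hint, `Strict-Transport-Security` for a security policy, and `X-Correlation-Id` is a hypothetical header carrying transaction information. Header names are compared case-insensitively, as HTTP requires.

```python
from typing import Dict, List, Mapping, Optional

# Hypothetical header contract: None means "must be present, any value";
# a string means the value must match exactly.
HEADER_CONTRACT: Dict[str, Optional[str]] = {
    "Content-Type": "application/json",
    "Cache-Control": None,              # QoS hint the consumer relies on
    "Strict-Transport-Security": None,  # security policy
    "X-Correlation-Id": None,           # hypothetical transaction/tracing header
}


def header_violations(headers: Mapping[str, str],
                      contract: Dict[str, Optional[str]]) -> List[str]:
    """List the ways a response's headers break the consumer's header contract."""
    # HTTP header names are case-insensitive, so normalise them before lookup.
    normalised = {name.lower(): value for name, value in headers.items()}
    problems = []
    for name, expected in contract.items():
        actual = normalised.get(name.lower())
        if actual is None:
            problems.append(f"missing header '{name}'")
        elif expected is not None and actual != expected:
            # Exact match only; a stricter check might parse parameters such as charset.
            problems.append(f"header '{name}' is '{actual}', expected '{expected}'")
    return problems


response_headers = {
    "content-type": "application/json",
    "Cache-Control": "max-age=60",
    "Strict-Transport-Security": "max-age=31536000",
}
print(header_violations(response_headers, HEADER_CONTRACT))
# ["missing header 'X-Correlation-Id'"]
```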

With all these test categories and types, there is a danger of writing duplicate tests, meaning tests of the same requirement (either functional or non-functional). The same rules that apply to production code apply to test code: strive for simple code and avoid duplication! Especially within the functional testing pyramid, the following rules (as specified by Martin Fowler) should always be adhered to:

- If a higher-level test spots an error and there's no lower-level test failing, you need to write a lower-level test (if possible).

- Push your tests as far down the test pyramid as you can!
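As a small illustration of pushing a test down the pyramid, suppose a hypothetical end-to-end checkout test reported a wrong amount for a 100% discount voucher. Rather than relying on that slow, brittle test, the case is pinned down as a unit test against the domain function itself. The `order_total` function and its rules are invented for this sketch; it uses only Python's standard `unittest` module.

```python
import unittest


def order_total(prices, discount_percent):
    """Hypothetical domain function: sum line prices and apply a percentage discount."""
    if not 0 <= discount_percent <= 100:
        raise ValueError("discount_percent must be between 0 and 100")
    subtotal = sum(prices)
    return round(subtotal * (100 - discount_percent) / 100, 2)


class OrderTotalTest(unittest.TestCase):
    # The full-discount case was first noticed by a (hypothetical) end-to-end test;
    # it is now covered here, at the bottom of the pyramid, where it is fast and stable.
    def test_full_discount_is_free(self):
        self.assertEqual(order_total([10.0, 5.5], 100), 0.0)

    def test_no_discount_keeps_subtotal(self):
        self.assertEqual(order_total([10.0, 5.5], 0), 15.5)

    def test_rejects_invalid_discount(self):
        with self.assertRaises(ValueError):
            order_total([10.0], 120)
```

Saved in a file such as `test_orders.py` (a hypothetical name), it runs with `python -m unittest test_orders`.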
